A Moonshot for humanity

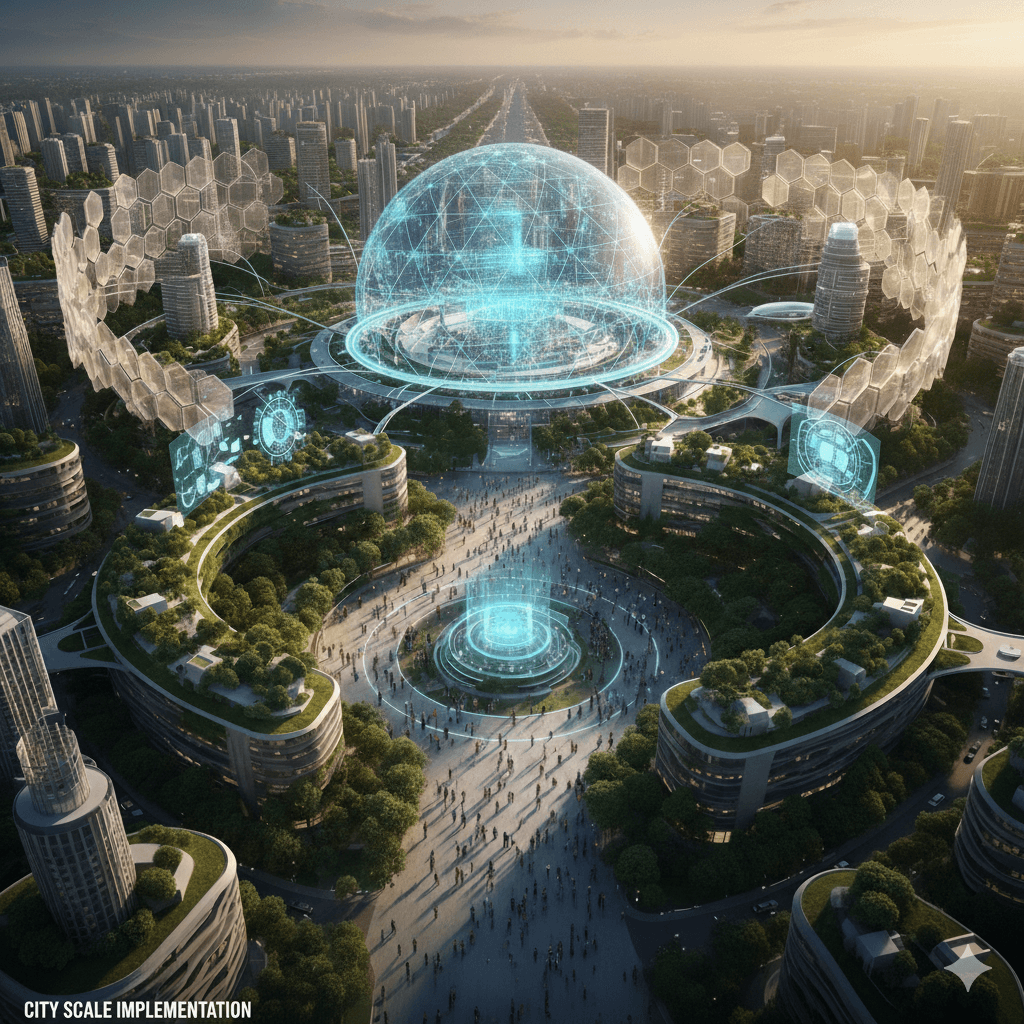

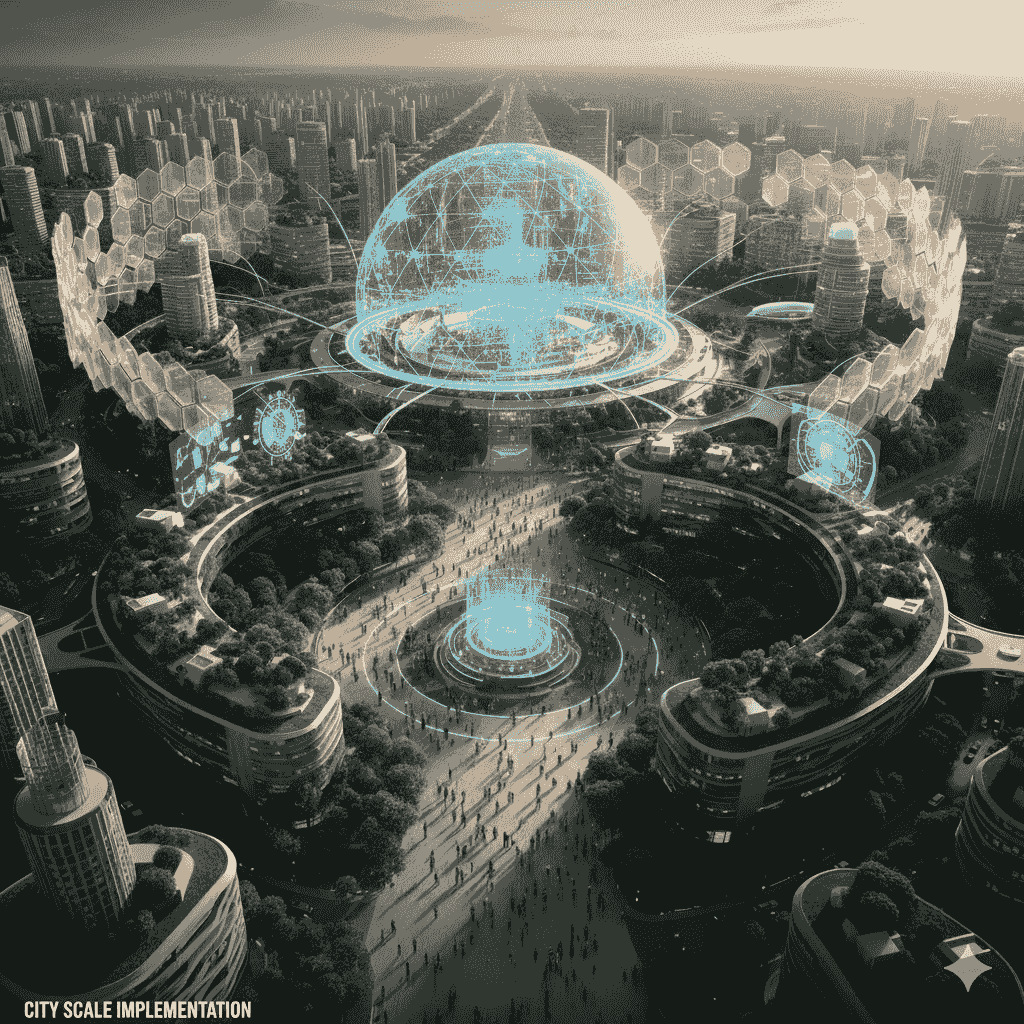

A digital-physical city enabled with safe universal basic intelligence for all its residents. A 70-acre living lab for AI safety research on agentic and physical AI, at the heart of the tech scene in Bangalore. A place for humans to achieve their full potential. By 2029, it will be home to 25,000 AI researchers and innovators.

AGI or Artificial Jagged Intelligence (AJI): why it matters

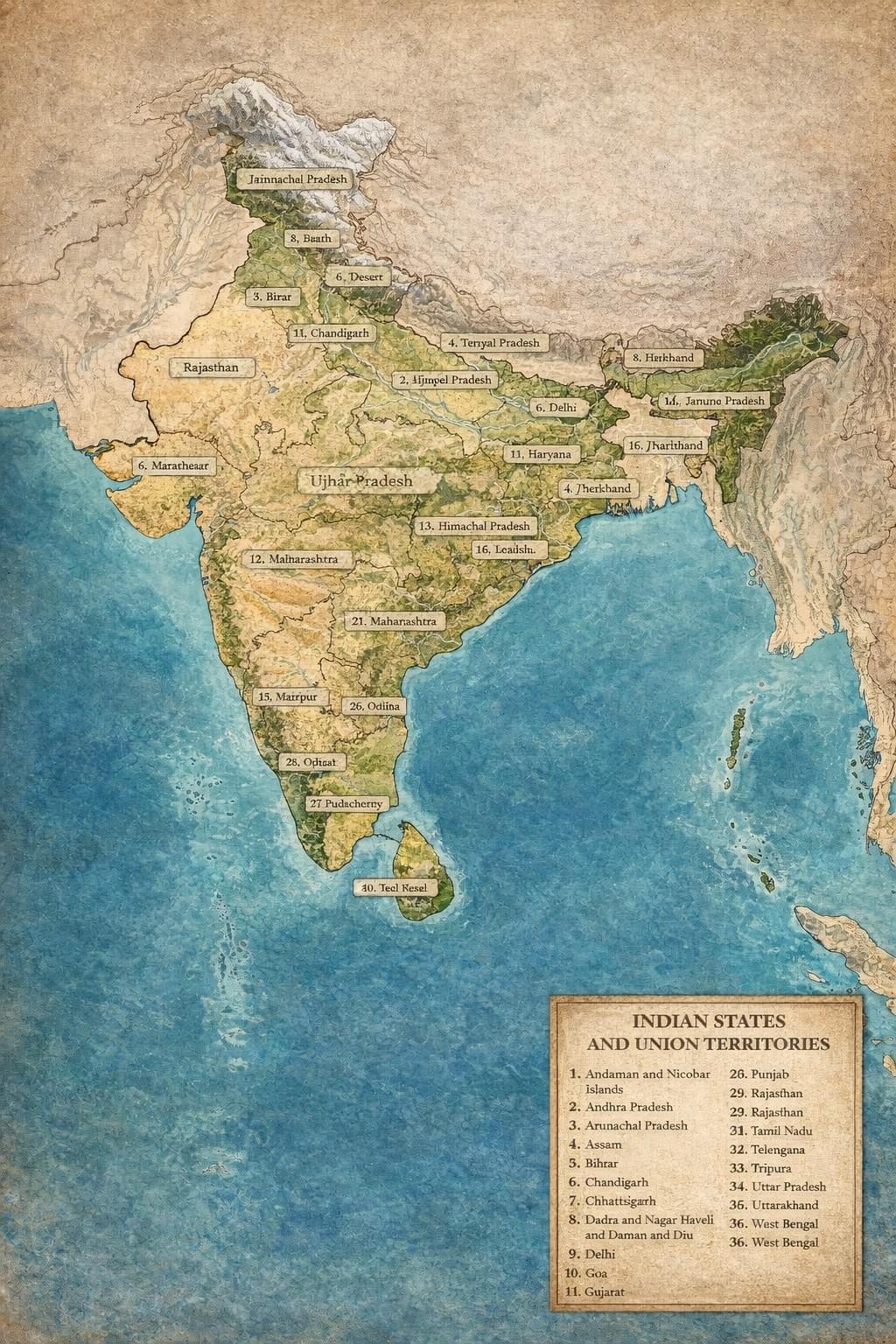

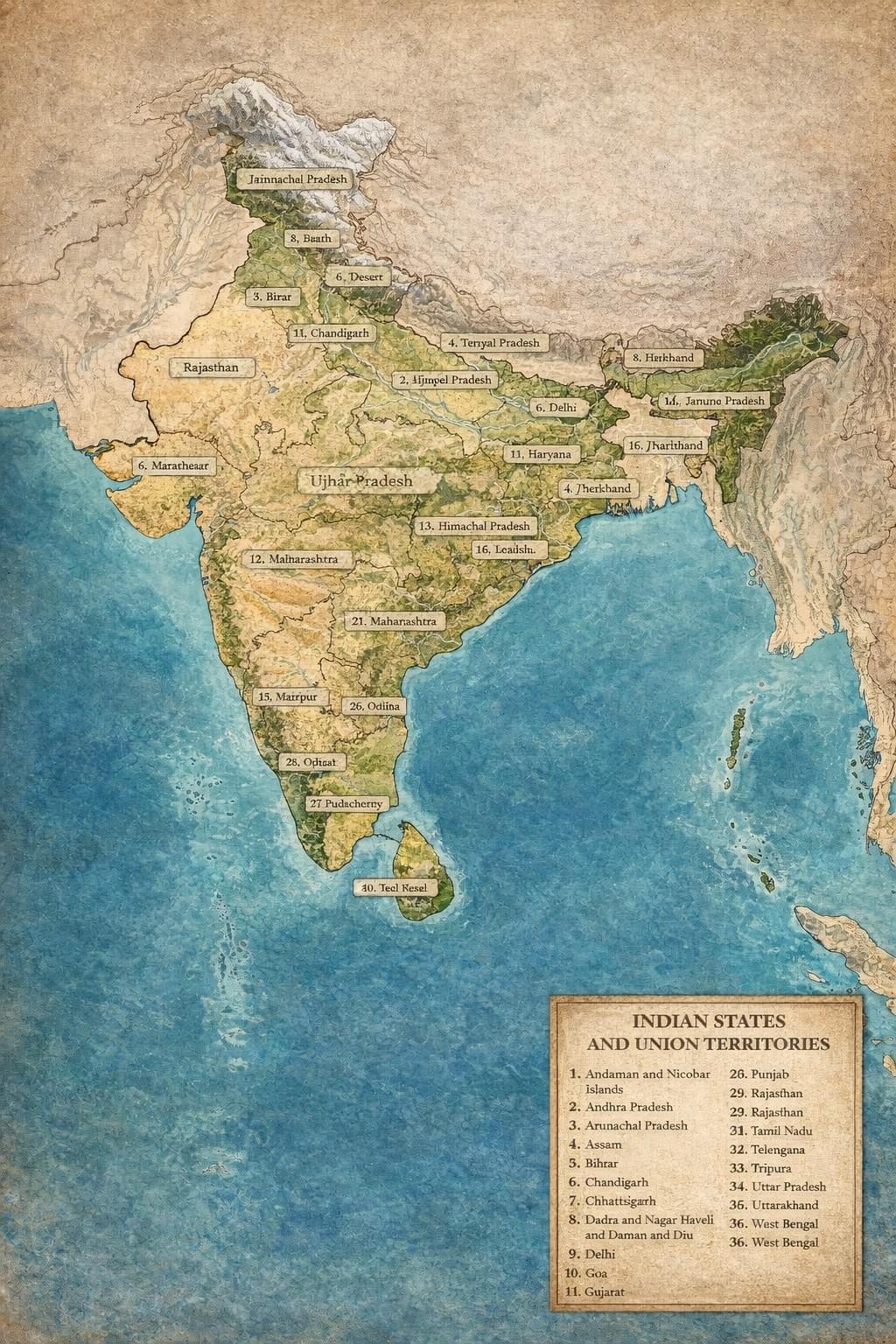

The mad race to get to AGI/ASI is real and frighteningly fast. However, our current AI models are far from foundational, even though they have been labeled as such. (See the map of India drawn by the most expensive model trained in AI history. You can try it yourself with your favorite AI chat: ask it to draw a map of India.) Look at the cost we are already paying with our internet, which is slowly dying under rapidly accelerating AI slop. The public internet is now being swamped by 16+ billion poor-quality AI-generated images (34 million added daily), rendering it unfit for developing future models. 52% of the articles on the internet are written by AI, and realistic-looking AI-generated videos are everywhere, drowning out human signal and breaking the risk–reward model for human creators. Almost 77% of organizations are concerned about AI hallucinations, and these increase in reasoning models. The next evolution of AI is agentic AI: systems that can act on their own. Let’s take a step back and understand the true risks.

Today’s models are astonishingly intelligent and broken at the same time. They can design a chip, debug a driver, and summarise every paper on a topic, and then fail at basic causal reasoning or misread a simple real-world situation. Or they miscount the letters in simple words like strawberry or garlic, or fail to draw the map of India correctly, the very notion of Bharat (you can try this at home: ask whatever AI model you are using to draw the map of India). This is jagged intelligence: extreme spikes of capability together with deep valleys of brittleness. And we’re giving that jagged intelligence agency prematurely, without understanding the size and scope of the risk. “We are taking an intelligence that hallucinates, that overestimates its own certainty, and we’re giving it the keys to: your cloud account, your bank account, your factory, your city and eventually your life.”

The risk isn’t a Hollywood apocalypse to be enjoyed with popcorn on movie night. It’s something more mundane and more dangerous in the real world: imagine millions of small, confident, slightly wrong actions rippling through logistics, infrastructure, finance, cities and eventually our societies. While it’s easy to spin up millions of these agents almost at the flip of a switch, it’s hard to control them reliably. These so-called foundation models still hallucinate in complex workflows and, to add to the scare, agents can turn rogue, get corrupted or be hijacked by malicious actors. Shipping autonomy on top of this kind of instability and unreliability is like pouring concrete on quicksand: a perfect recipe for disaster. And this agentic AI will be the foundation for the next evolution, physical AI, allowing us to fill our precious Earth with robots and machines that work autonomously. Without adequate safety testing and validation of agentic AI and physical AI in the real world, and without anchoring them with a human in the loop, we are heading into a massive crisis almost blindfolded.

The jaggedness didn’t matter as much when AI only produced text on a screen to be read by a human being. It becomes existentially important when AI can act. And unlike the internet, we only get one Earth, a tiny pale blue dot. We need to make this transition in a safe and humanly responsible way by grounding AI properly in the real world. The missing piece isn’t more web data, a crazy amount of compute or bigger models. It’s actual experience – coherent, temporal, grounded experience of a real world that has continuity, consequences and humans in the loop. Our next research quests in pursuit of AGI, combining LLMs with world models, are irrelevant unless they are based on dynamic datasets that mimic the real world closely. In short, we need a city-scale simulator that generates data about the environment, cityscapes, buildings, the people living in it, AI agents and robots, all synced on a common timescale.

To build the city-scale data bed that will help bring real-world grounded AI to life, and to validate the foundations of the future man-machine society safely in a humanity-first model, we are building an AI innovation city in Bangalore (code-named B1 AI City). It is a city built from the ground up specifically for AI research and innovation, inhabited by AI builders from across the globe. Unlike web data, which is full of noisy snapshots, memes and things that may not be meaningful in day-to-day life, AI City generates data as one continuous, connected story about real people, real places, real actions and their consequences. Imagine a 70-acre city as a living, breathing “AI factory for the physical world”: a home to 25,000 AI researchers and builders on campus, each with agentic digital twins (software agents) anchored to a digitized human identity, allowing for a kind of universal basic intelligence (UBI) at city scale, and physical AI twins (robots, AVs, embodied systems), all working seamlessly together. A fully sensed and simulated innovation district: buildings, streets, logistics, energy and mobility, together with humans, all mirrored in a city-scale world model. This single city could potentially generate on the order of 1 exabyte of multimodal data per year. However, it is not just about the scale of the data. Unlike a web scrape, this world model data is:

1. Coherent – same people, places, and assets across text, code, video, sensors, BIM, logs.

2. Temporal – full histories of tasks, decisions, actions, and outcomes.

3. Action-linked – every byte tied to real objectives in robotics, logistics, mobility, buildings, and governance.

It’s the perfect data substrate to move from “chatbots that talk” to agents and robots that reliably do, and that can potentially co-exist with us without ripping apart the fabric of our society.
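To put the headline figures in perspective, here is a back-of-envelope calculation in Python. It is only a sketch: it assumes the decimal (SI) definition of an exabyte, an even split across all 25,000 residents, and data generated uniformly through the year. None of those assumptions is a design commitment, and the function names are purely illustrative.

```python
# Back-of-envelope scale check for the figures quoted in the text.
# Assumptions (not from the source): SI exabyte, even split across residents,
# uniform data generation over a 365-day year.

EXABYTE = 10**18                    # bytes, decimal (SI) definition
RESIDENTS = 25_000                  # researchers and builders quoted in the text
SECONDS_PER_YEAR = 365 * 24 * 3600


def per_resident_bytes_per_year(total_bytes: int = EXABYTE,
                                residents: int = RESIDENTS) -> float:
    """Average yearly data volume attributable to one resident."""
    return total_bytes / residents


def sustained_bytes_per_second(total_bytes: int = EXABYTE) -> float:
    """Average ingest rate needed to absorb the yearly volume."""
    return total_bytes / SECONDS_PER_YEAR


if __name__ == "__main__":
    per_resident = per_resident_bytes_per_year()
    print(f"{per_resident / 1e12:.0f} TB per resident per year")
    print(f"{per_resident / 365 / 1e9:.1f} GB per resident per day")
    print(f"{sustained_bytes_per_second() / 1e9:.1f} GB/s sustained ingest")
```

Under these assumptions, 1 exabyte per year works out to about 40 TB per resident per year, roughly 110 GB per resident per day, and a sustained city-wide ingest rate of about 31.7 GB/s.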

To enable this massive moonshot, we need AI startups, research labs, AI researchers and AI innovators to come together with ecosystem builders who are aligned with the ethos of building a safe man-machine society. If you are one of them, we are looking for you. Let’s build this future together.

Our north star: instead of hoping for auto-alignment, build AI with humanity-first grounding

- Stop assuming auto-alignment; build to extend human society. Artificial intelligence, just like human intelligence, is double-edged. Alignment must be carefully thought through, specifically tested, and enforceable at scale for AI to work together with humanity.

- Anchor agency to identity. If an action affects people, it must map back to a verified human digital identity and explicit consent—or it shouldn’t ship.

- Fix value flow. Without a creator-first risk–reward ledger, we are systemically extracting from humans to subsidize the growth of machines. That’s untenable and will lead to an unsafe transition. We must have a way to keep rewarding AI trainers and aligners, so that we end up with a better version of our society, not a worse one.

- Make safety measurable in the real world. Hallucinations, jailbreaks, and misuse aren’t mere PR issues anymore; as AI emerges from the lab into the real world, they are engineering and governance liabilities. We need to evaluate in the real world, red-team, publish and keep monitoring, just like we do with human intelligence. While early AI relied on benchmarks to guide development, we must have a real-life test bed to ensure that AI works in the real world, is safe and can be trusted.

The 7-layered humanity-first AI research and innovation ecosystem that we’re building

- Seed-Linked Identity Layer: Bind agent actions, permissions, and provenance to digitized human identity and revocable consent. This will enforce accountability by design.

- Risk–Reward Ledger: Transparent, auditable routing of value to creators across media, code, data, and models. Humans get paid first.

- Safety Stack for Agents: AI agent ecosystem that has capability limits, continuous evaluations, incident response, and recall—before scale, not after harm.

- Open, interoperable standards: Open protocols anyone can implement. No walled gardens. No black boxes dictating public reality.

- Safe Agentic AI and Physical AI evolution: Agentic AI will scale further into physical AI, so it is important that we lay the foundations of physical AI in a similarly open, safe and responsible way.

- AI multimodal test data for AI research: This means continuous streams of perception, action, and feedback, which make it possible to train agents that understand cause and effect, not just pattern matching.

- AI research city: A city to house the above efforts and more, together with an AI community that is excited about building AI for the real world and an ecosystem for AI safety and continuous monitoring. We fundamentally believe that AI safety in the real world can't be an afterthought; the systems have to be thought through beforehand, from a humanity-first perspective, before we unleash AGI into our society.
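As a rough illustration of how the identity layer and the safety stack above could fit together, here is a minimal Python sketch. It is a sketch under stated assumptions, not a description of the actual B1 AI City stack: the names `Consent`, `AgentAction` and `ActionGate`, and the single `spend_limit` capability limit, are hypothetical and chosen only for illustration. The idea it shows is that every agent action must map back to a human identity with unrevoked consent for that scope, stay within a hard limit, and leave an audit trail.

```python
from dataclasses import dataclass, field


@dataclass
class Consent:
    """Revocable consent given by one human identity (hypothetical model)."""
    human_id: str
    scopes: set[str]
    revoked: bool = False


@dataclass
class AgentAction:
    """An action an agent wants to take on behalf of a human."""
    agent_id: str
    human_id: str
    scope: str
    cost: float


@dataclass
class ActionGate:
    """Allows an action only if identity, consent and limits all check out."""
    spend_limit: float = 100.0  # illustrative hard capability limit
    consents: dict[str, Consent] = field(default_factory=dict)
    audit_log: list[tuple[str, str, str, str]] = field(default_factory=list)

    def grant(self, human_id: str, scopes: set[str]) -> None:
        self.consents[human_id] = Consent(human_id, set(scopes))

    def revoke(self, human_id: str) -> None:
        if human_id in self.consents:
            self.consents[human_id].revoked = True

    def authorize(self, action: AgentAction) -> bool:
        consent = self.consents.get(action.human_id)
        if consent is None:
            reason = "no verified identity"
        elif consent.revoked:
            reason = "consent revoked"
        elif action.scope not in consent.scopes:
            reason = "scope not consented"
        elif action.cost > self.spend_limit:
            reason = "capability limit exceeded"
        else:
            reason = "ok"
        # Every decision, allowed or denied, is recorded for later audit.
        self.audit_log.append(
            (action.agent_id, action.human_id, action.scope, reason)
        )
        return reason == "ok"


if __name__ == "__main__":
    gate = ActionGate(spend_limit=50.0)
    gate.grant("human-1", {"pay_invoice"})
    print(gate.authorize(AgentAction("agent-a", "human-1", "pay_invoice", 20.0)))
    gate.revoke("human-1")
    print(gate.authorize(AgentAction("agent-a", "human-1", "pay_invoice", 20.0)))
```

In this toy version the first request is allowed and the second is denied once consent is revoked. A real system would also need verified identity, tamper-evident logs and incident response, which this sketch leaves out.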

This is a moonshot movement, not a pitch. Please join.

We aim to mobilize 10,000+ AI founders and researchers across AI startups, corporate innovation labs, university ecosystems, and policy partners in 10+ partner nations, building together with India’s massive community of 20mn+ developers at the core, to make this the default way AI is built for the real world: the safe transition. It’s brutally tough, almost impossible to do. However, for the sake of the pale blue dot, we must do it.

Why this AI grounding matters, before it’s too late

- Trust: If you can’t trust what you see or who made it, society decays slowly but surely.

- Dignity: Humans must be recognized, and rewarded, for their labor and creativity. A safe transition is better than the jobless chaos that the current AI revolution promises.

- Safety: Agentic systems need anchoring in human identity, hard limits, and recall.

- Sovereignty: Communities and countries deserve control over how AI uses their data and culture.

What we strive for: a humanity-first AI pledge for the builders

1. We will not ship unaccountable AI agents and physical AI. They must be tethered to real human identities and grounded in real world data.

2. We will not normalize creator strip-mining. Risk–reward for human creators must hold, otherwise society will be in chaos.

3. We will not ship without real world validation and testing.

4. We will not accept “move fast and pray for alignment” as a strategy.

5. We will recognize human ingenuity and believe in limitless human potential.

All this is not just daydreaming; we are building it on the ground, today. Our first pit stop is 500,000 sq ft of AI innovation space, B1 AI Superpark, with built-in lightning-speed connectivity (up to 400 GBps) to all AI clouds at <1 ms latency. This would enable AI model training without the associated pain of moving data around slowly. Located in the heart of the Sarjapura tech ecosystem in Bangalore, it’s the perfect place for technology builders to come together with AI researchers. This is where we are pulling together the biggest gathering of the global AI community that can help build this humanity-first AI future together.

Aligned with the AI summit happening in India, we are also launching the "humanity first AI city moonshot" in February 2026, targeting:

1. Founders & builders: To help ship identity-aware AI agents and provenance tools. We are building the AI innovation infrastructure and the data and research bed for AGI research and AI safety; however, we need AI builders and researchers to use it to the full.

2. Universities & AI research labs: To co-lead open AI sandboxes, evaluations, and research into real-world AI use cases and safety.

3. Corporates: To research agentic AI safety, pilot the ledger, co-fund standards, open and operate real-world testbeds.

4. Policy & civil society: To help write societal AI guardrails that protect people without freezing progress.

We choose a humanity-first path. If you do too, join us and sign the pledge. Let’s shape the terms of our man-machine society, safely and responsibly, with grounded AI agents and robots, before they get set for us. Join us as we build the future, a humanity-first AI future, together.

Moonshot for humanity

Three big problem areas where we are looking for collaboration. If you are working in these areas, we are here to collaborate and to align capital and infrastructure around them.

Open Agentic and Physical AI foundations

Our current so-called foundation models are hardly foundational. They rely too heavily on an understanding of the world drawn from internet data. They might be useful for language and reasoning, but their understanding of the real world is patchy and jagged at best. We need true foundation models that work in real life, which agentic and physical AI can rely on and build upon. We need world models that work at scale and with precision, while being sustainable from an energy perspective.

Safe Universal Basic Intelligence

We envision a time when every one of us will have an AI agent or digital twin to work with. Eventually this would manifest in physical AI too. How can we enable that vision to happen, safely and universally? What would a safe universal basic intelligence stack look like? How do we power and provide compute at such a scale? How do we govern these systems?

Safe and open agentic AI browser with seed-linked human identity

For jagged intelligence to work on tasks and workflows, we need to tie it to humans for training and for alignment with societal laws and frameworks. To make the agentic internet governable, we also need to create systems that society can oversee and align with our current societal framework. We need to create a safe and open agentic browser, value-aligned with human workflows.

Join the moonshot

If you are an AI startup, an AI research lab, a global enterprise focused on AI transformation, or just an AI innovator, join the moonshot.

Foundational Partners

The early partners who are helping us launch this moonshot

ARF

The Airawat Research Foundation (ARF) is a Section 8 not-for-profit company established by IIT Kanpur to act as India's National Centre of Excellence for Artificial Intelligence in Sustainable Cities. It translates AI research into practical solutions for urban challenges like air quality, mobility, and waste management, supported by the Ministry of Education.

SPARC FRO

SPARC is a mission-focused autonomous research organization built to develop foundational technologies that ensure a secure and sovereign digital future. It works at the intersection of agentic AI and new security primitives such as confidential computing. Agentic systems can act, reason, and coordinate autonomously at scale.

BITS Pilani

Birla Institute of Technology & Science, Pilani (BITS Pilani) is a premier private deemed-to-be university in India, renowned for higher education and research in engineering, sciences, and management. Founded in 1964 and recognized as an "Institute of Eminence," it has campuses in Pilani, Goa, Hyderabad, Mumbai, and Dubai.

IIT Ropar

IIT Ropar is located in the district of Rupnagar, in the city formerly known as Ropar, which is of great historical importance. The city dates back to the Harappa–Mohenjo-daro civilization, located east of the Satluj River. IIT Ropar is recognized as an Institute of National Importance, offering B.Tech, M.Tech, and Ph.D. programs with a strong focus on research, innovation, and sustainability, and ranks among the top engineering schools in India.

RGA

RGA Software Systems Pvt Ltd is a leading developer of Grade A commercial office space in Bangalore, India, with over 10 million sq ft developed. Specializing in sustainable IT parks and built-to-suit assets, they serve global corporations, with notable projects including Pritech Park, RGA Tech Park, and Surya Projects.

iSPIRT Foundation

The iSPIRT Foundation (Indian Software Product Industry RoundTable) is a non-profit, volunteer-driven technology think-and-do tank that acts as an ecosystem builder for the Indian software product industry. It focuses on transforming India into a product hub by developing "Digital Public Infrastructure" (DPI) such as Aadhaar, UPI, and OCEN to solve complex societal problems.

Co-founding Team

Deep experience across AI, entrepreneurship, investing and infrastructure

Umakant Soni

Umakant is an AI pioneer building the AI ecosystem as an AI founder, builder and investor, having spent 25 years in tech and 16 years in the AI space. He is deeply motivated by building a humanity-centric foundation for the AI future. He earlier cofounded India's first AI chatbot company, Vimagino, in 2009-10. He also cofounded pi ventures (India's first AI-focused fund) and ARTPARK (AI & Robotics Technology Park), where he was the founding CEO. Umakant is an alumnus of IIT Kanpur and likes to meditate.

Subhashis Banerjee

Subhashis is an entrepreneur and investment banker by background and has previously worked for Standard Chartered Bank Real Estate Private Equity Fund, Tata Housing Development Company, Sequoia Capital and Tishman Speyer Private Equity Fund. He also cofounded and served as CIO (Chief Investment Officer) at ARTPARK, growing an ecosystem of 19+ AI startups. An alumnus of IIT Kanpur and IIM Calcutta, he has a deep interest in wildlife preservation.

Sireesh Kupendra

Sireesh works at the intersection of artificial intelligence, infrastructure, and urban futures. He is the Director of RGA Infra, overseeing a 10+ million square foot real-asset platform across India. He is also a co-founder of the KReate Foundation, working on urban planning and sustainable city design, with a long-term vision to build AI-native cities that can serve as global templates for human-centric development in the future. He is an alumnus of the University of California, Davis.

Get everything that you need to immerse yourself into the AI future

World class infra for innovators

The largest AI innovation campus, offering world-class infrastructure with a plug-and-play model for AI innovators. It ensures that AI startups and large companies are able to create AI co-innovation labs easily and at warp speed.

AI training ecosystem for cutting edge AI

Cutting-edge connectivity infrastructure with 400 Gb/s links to AI clouds at <1 ms latency. Train foundational models, refine them and run inference on them without a glitch.

Get access to world class AI mentors and startup ecosystem

A 50,000 sq ft training lab for AI innovators, powered by leading AI innovators, research labs, universities and NVIDIA, to get up to speed with agentic AI and physical AI.

Contact us

B1 AI Superpark - RGA Tech Park, Block no. 4, Sarjapura
